1 What Constitutes Data?

“Data, data everywhere, but not a thought to think.”

- John Allen Paulos

It is now fairly common in the biological sciences to focus, perhaps excessively, on sharing large datasets, especially sequencing-based ones. These are important to share, but they are far from all the data that we generate. Indeed, they are largely useless unless accompanied by enough experimental metadata to put them in context and make it possible to interrogate them for biological differences.

1.1 A brief overview of sources/types of data

1.1.1 Results Outputs

- Sequencing Data

- e.g. genomic, RNA-seq, ChIP-seq, ATAC-seq, & their single-cell variants

- Imaging Data

- e.g. wide-field, confocal, electron microscopy

- ‘Bench’ data

- e.g. gels, blots, various forms of small tabular data of counts & scores

- Numerous others, e.g. flow cytometry, X-ray crystallography

1.1.2 Process Outputs

- Protocols

- Step-by-step procedure to reproduce the experimental work, including all relevant details, e.g.:

- Biological resources e.g. strains, cell lines, tissue samples

- Volumes, concentrations, container sizes, amounts, temperatures

- Chemicals, Reagents, enzymes, & antibodies

- Equipment/kit, make, model, software versions (where potentially relevant)

- Tutorial, video, & training content conveying how a method is carried out in practice, including details not readily captured in writing.

- Code

- Scripts & software packages

- Pipelines, templates, & workflows

- Computational Environments

- Software dependencies and versions

- Approximate computational resources required

- Minimum hardware requirements & run times

1.2 Metadata

“Metadata Is a Love Note to the Future”

- unattributed

Metadata is the context needed to find, use, and interpret data.

Robust metadata is essential to making data FAIR.

Findable¹

- Have a globally unique identifier, e.g. a DOI (Digital Object Identifier)

- Be indexed in searchable resources

- Have ‘Rich’ metadata

Accessible

- Be retrievable using a standard, open protocol

- Keep (some) metadata accessible even when the data itself is not²

Interoperable

- Use open, formally specified languages or formats

- Use vocabularies / ontologies which are themselves FAIR

- Reference other (meta)data

Re-usable

In more concrete terms, the information that needs to be present in, or referenced by, a dataset’s metadata to make it as FAIR as possible includes:

- Experimental Design/Question/Motivation

- Organisms/Lines/Strains, Reagents etc.

- File format and structure information

- Technical information from Instrumentation

- How the instrument was configured during the data acquisition.

- Its make, model and embedded software versions.

- Researcher Identity

- Human names are not globally unique identifiers; plenty of researchers share the same name. If you do not already have one, get an ORCID iD and associate it with all of your publications.

- The protocols used in its generation

- The code used to analyse it

I used the term ‘Rich’ for succinctness when describing what makes data findable. ‘Rich’ is unfortunately somewhat vague because ‘richness’ is highly context dependent: the type of data and experiment you have determines which metadata are essential to making it effectively FAIR.

Which keywords make it most findable? These might be biological, technical, or social. Say you talked to someone at a conference who mentioned a cool dataset, and you remember their name and the name of the technology they used. Can you find the dataset with “John Smith” and YASSA-seq (yet another stupid sequencing acronym sequencing)?

Which biological information about the samples is most needed for different analyses of the data?

Which file format is most widely compatible, or has the best features for accessing subsets of large datasets over a network?

Is the license on the data clearly indicated so that I know I’m legally allowed to re-use it? (see Chapter 8)

All of these details provide additional characteristics that enhance the findability of data by improving your ability to search for it and filter the results. If your metadata is just ‘RNA-seq’, there are millions of results. If it is ‘RNA-seq (PolyA), Mouse <strain>, 12 samples (6 Control, 6 <Homozygous Mutant X>), aged 2 months’ and so on, then, assuming it is structured well enough for search tools to parse, I can actually find out whether I can use your data to answer my question. I may even be able to do so with a fairly simple search tool and without manually trawling through hundreds or thousands of hits. On a self-interested note, people can only cite your data if they can find it.
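A minimal Python sketch of that difference, assuming a few hypothetical dataset records whose metadata is stored as structured fields. The accessions, field names, and values are invented for illustration; only the NCBI taxon identifiers (NCBI:txid10090 for mouse, NCBI:txid9606 for human) are real. A free-text search for ‘RNA-seq’ matches every record, while filtering on structured fields leaves only the dataset that could actually answer the question.

```python
# Hypothetical metadata records; accessions and field names are illustrative only.
RECORDS = [
    {"accession": "DS-001", "assay": "RNA-seq", "library": "PolyA",
     "taxon": "NCBI:txid10090", "n_control": 6, "n_mutant": 6, "age_months": 2},
    {"accession": "DS-002", "assay": "RNA-seq", "library": "Total RNA",
     "taxon": "NCBI:txid10090", "n_control": 3, "n_mutant": 3, "age_months": 6},
    {"accession": "DS-003", "assay": "RNA-seq", "library": "PolyA",
     "taxon": "NCBI:txid9606", "n_control": 10, "n_mutant": 10, "age_months": None},
]


def keyword_search(records, term):
    """Naive free-text search: does the term appear in any field of the record?"""
    return [r["accession"] for r in records
            if any(term.lower() in str(v).lower() for v in r.values())]


def structured_search(records, *, assay, library, taxon, min_per_group):
    """Filter on structured fields instead of free text."""
    return [r["accession"] for r in records
            if r["assay"] == assay
            and r["library"] == library
            and r["taxon"] == taxon  # NCBI:txid10090 is Mus musculus
            and min(r["n_control"], r["n_mutant"]) >= min_per_group]


print(keyword_search(RECORDS, "RNA-seq"))  # ['DS-001', 'DS-002', 'DS-003']
print(structured_search(RECORDS, assay="RNA-seq", library="PolyA",
                        taxon="NCBI:txid10090", min_per_group=6))  # ['DS-001']
```

The structured query only works because each characteristic lives in its own field with a controlled value; the same information buried in a free-text description would put us back to keyword matching.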

The goal, in short, is full provenance of the results & conclusions, leading step-wise from the exact materials and practical steps of the experiment, through the complete logic of the analysis, to the conclusions drawn. In modern science this is a long chain, but it must be a complete one or it is not good science, because it must be at least theoretically possible for someone to pick up the description of your work and check your conclusions independently. I’ve read papers in the modern literature with less useful methods sections than this alchemical description of the preparation of phosphorus written by William Y-Worth in 1692³. Don’t be one of these people!

1.2.1 Ontologies & Controlled Vocabularies

In philosophy, the field of ontology concerns itself with the groups and categories of entities that exist; this often involves much disputation over which of these are basal and which are merely composed of collections of other things.

In knowledge management and computer science, the practical application of these concepts is to have shared, common definitions of the things we work on, so that we avoid talking about the same things with different names, or using the same name to refer to different things. Ontologies also provide standard categories, so that we can refer to groups of things, as well as individual entities, by the same names. These groups are also useful for analyzing, visualizing, and summarizing large collections of entities.

There are ontologies for genes, proteins, organisms, reagents, methods, technologies, file types, clinical phenotypes, and much more. These make it possible to refer to common entities and, importantly, to perform effective searches of data labeled with ontology terms.

Almost everyone has run into the problem of inexact matches when searching for all the instances of a word in a document. Consider finding every instance of the word “color”: what about variants like “colors”, “Color”, and the British English spelling “colour”⁴? What about inflections like “colouring” or “coloured”? There is capitalization, pluralization, conjugation, international spelling, and so on. We rapidly resort to ‘fuzzy’ matching to find all the instances, and may then miss some (false negatives) or match things we didn’t intend, e.g. “discoloration” (false positives). These different forms may also mean slightly different things, and the problem quickly gets messy. Ontologies provide standardized human-readable (e.g. human, NCBI:txid9606) and machine-readable names for the same things, avoiding the many variants of this sort of matching problem. For each instance of, and variant on, the word “color” used here, I linked to its latest definition in Wiktionary to make it clear that they all refer to the same concept.
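The matching problem can be sketched in a few lines of Python. The two regular expressions show a too-strict search (false negatives) and a too-loose one (a false positive from “discoloration”); the synonym table is a simplified stand-in for an ontology, mapping every accepted surface form to a single concept identifier. `EX:0000001` is a hypothetical identifier, and a real ontology term would also carry a definition and formal relationships to other terms, not just a list of synonyms.

```python
import re

TEXT = "Color, color, colour and colors; the colouring was coloured. Note the discoloration."

# Too strict: an exact, case-sensitive whole-word search misses most variants (false negatives).
exact = re.findall(r"\bcolor\b", TEXT)

# Too loose: a 'fuzzy' pattern catches the variants but also matches inside
# "discoloration" (a false positive).
fuzzy = re.findall(r"colou?r\w*", TEXT, flags=re.IGNORECASE)

# Ontology-style approach: every accepted surface form maps to ONE concept identifier.
# "EX:0000001" is a hypothetical identifier used only for illustration.
CONCEPT_ID = "EX:0000001"
SYNONYMS = {form: CONCEPT_ID for form in (
    "color", "colour", "colors", "colours",
    "coloring", "colouring", "colored", "coloured",
)}


def tag_concepts(text):
    """Return (word, concept_id) pairs for words listed in the synonym table."""
    words = re.findall(r"[A-Za-z]+", text)
    return [(w, SYNONYMS[w.lower()]) for w in words if w.lower() in SYNONYMS]


print(exact)  # ['color']
print(fuzzy)  # ['Color', 'color', 'colour', 'colors', 'colouring', 'coloured', 'coloration']
print([w for w, _ in tag_concepts(TEXT)])
# ['Color', 'color', 'colour', 'colors', 'colouring', 'coloured']
```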

Ontologies enable searching of literature and databases, and identification of the links and relationships between entities in literature, databases, data repositories, and elsewhere. This facilitates research: it becomes much easier to identify all the places, across different media, that refer to the same entities, helping you find sources and resources relevant to your work and letting computational analysts mine the many sources of data on a subject with much greater ease.

EBI maintains a database and search tool for biological ontologies, the Ontology Lookup Service (OLS). Many biological ontologies are maintained and developed under the Open Biological and Biomedical Ontology Foundry (OBO Foundry). Ontologies are living documents and must be updated and extended as new entities are described, definitions are revised, and so on. If an entity that you need to talk about in your work does not yet exist in an ontology, consider contributing it to a suitable one via the OBO Foundry.

A source for exploring more general web vocabularies is Linked Open Vocabularies (LOV), where you can find vocabularies for describing many different things. These vocabularies are generally represented in the Web Ontology Language (OWL).

Another fun and relatively simple example of an ontology is CiTO (Shotton 2010, [cito:discusses] [cito:citesAsAuthority]), an ontology for describing the nature of citations. It can represent, for example, whether the author agrees or disagrees with the conclusions of the paper they are citing, or is using methods or data from the cited source. It was recently adopted in a trial by the Journal of Cheminformatics (Willighagen 2020, [cito:citesAsAuthority] [cito:agreesWith]). I include these annotations inline here, but they would typically be represented in the bibliography rather than in the body of the text, though placing them prominently in a tooltip shown when a citation is hovered over might also be informative for the reader.

1.2.2 Metadata models, Schemas, and Standards

I’m quite new to the theory and practice of formal metadata standards & models, so contributions and corrections from those with more experience and expertise would be welcome.

I have a dream for the Web [in which computers] become capable of analyzing all the data on the Web – the content, links, and transactions between people and computers. A “Semantic Web”, which makes this possible, has yet to emerge…

- Tim Berners-Lee (1999)

Luckily, for many domains someone has already done the work of devising suitable metadata models, often based on the principles I briefly outline below, and you can take these ‘off the shelf’ and use them to describe your data. Unfortunately, there may be multiple competing standards and formats for metadata in your domain, and while the standards may exist, the tooling to use them is not always user friendly, especially for non-programmers. Hopefully the following section will help you select good metadata standards to adopt in your own work and provide a starting point if you need to devise one from scratch.

Where you choose to make your data available often determines the minimum accompanying metadata and the standard or format in which it is provided. We will detail metadata requirements and guidelines associated with different data publishing venues in Chapter 6 (Where to publish data). Many venues offer the option to include additional metadata or supplementary files, which could contain metadata in standards other than those directly supported by the data repository but which might be valuable to others re-using your data. For example, metadata about your experimental design that cannot readily be included in the format the repository supports, but that is stored in a file alongside your data and parsed by your analysis code.

The aspiration of a ‘semantic’ web, in which all the data on it are meaningfully annotated, interconnected, and readily interpretable by both machines and humans, dates back to the same era as some of my earliest memories of using the web in the late 1990s. A little more than 20 years on, this is yet to be fully realized, but much work has been done and many people and organisations are still pushing towards this goal.

The artifacts of scholarly publication, be they articles or datasets, are a subset of the artifacts that exist on the internet and may need to refer to, and interoperate with, things outside the confines of the network of scholarly citations. Consequently, it makes sense to consider the problem of annotating them with structured metadata in the wider context of the ‘semantic web’. Firstly, looking at the scope of this larger problem can be an excellent way of feeling better about the magnitude of your own problem space. Secondly, we can take some lessons and concepts from this work to inform our thinking about our particular subset of this wider problem.

The World Wide Web Consortium (W3C) develops standards for the web, including the absurdly general problem of developing standards for the ‘semantic web’ or ‘web of data’. Their standards are generally constructed from the same set of primitives, specifically ‘semantic triples’, the atomic unit of the Resource Description Framework (RDF) data model. A triple takes a subject-predicate-object form, similar to an entity-attribute-value form, for example “Alice Is 27” or “Alice Knows Bob”. Because the object of one triple can be the subject of another, triples compose into arbitrarily complex knowledge graphs, and statements can even be made about other statements (via RDF reification or the quoted triples of RDF-star), e.g. “Bob Knows (Alice Is 27)”.
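As a minimal sketch of the triple model (plain Python rather than a real RDF library, with a hypothetical `ex:` prefix standing in for full IRIs), a graph can be held as a set of subject-predicate-object tuples, and a query as a pattern in which unbound positions act as wildcards, loosely analogous to variables in a SPARQL triple pattern.

```python
# Each triple is (subject, predicate, object); "ex:" is a hypothetical namespace prefix.
GRAPH = {
    ("ex:Alice", "ex:age", 27),
    ("ex:Alice", "ex:knows", "ex:Bob"),
    ("ex:Bob", "ex:knows", "ex:Carol"),
}


def match(graph, s=None, p=None, o=None):
    """Return triples matching a pattern; None acts as a wildcard."""
    hits = [t for t in graph
            if (s is None or t[0] == s)
            and (p is None or t[1] == p)
            and (o is None or t[2] == o)]
    return sorted(hits, key=repr)  # sets are unordered; sort for stable output


def friends_of_friends(graph, person):
    """Follow ex:knows two hops: the object of one triple is the subject of the next."""
    direct = [o for _, _, o in match(graph, s=person, p="ex:knows")]
    return {o for d in direct for _, _, o in match(graph, s=d, p="ex:knows")}


print(match(GRAPH, s="ex:Alice"))
# [('ex:Alice', 'ex:age', 27), ('ex:Alice', 'ex:knows', 'ex:Bob')]
print(friends_of_friends(GRAPH, "ex:Alice"))  # {'ex:Carol'}
```

The two-hop lookup corresponds roughly to `SELECT ?fof WHERE { ex:Alice ex:knows ?f . ?f ex:knows ?fof }` in SPARQL (with the `ex:` prefix declared). A linear scan like this illustrates why naive triple queries get slow as graphs and query complexity grow; real triple stores index the triples.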

RDF is not a particularly efficient representation in terms of storage or the computational ease with which it can be queried. It can be queried using the SPARQL language, but this rapidly gets slow and verbose as complexity grows. The advantage of RDF is that it is essentially fully general, so it lends itself to directly representing highly unstructured data about which you can make few assumptions, and to bridging or translating between disparate domains.

Developing an RDF-based description of your domain-specific data description problem can be a good conceptual tool, even if it is not a representation that you will use day to day. Creating an RDF representation forces you to think about exactly which types of entities and relationships your domain needs in order to be described. You can then likely devise a more efficient representation that leaves implicit some of the things you would have to state explicitly to fully capture your system in RDF.

Whether you start with RDF and devise a simplified representation that makes more assumptions about your system, or translate a simpler representation into RDF, the ability to translate between RDF and a domain-specific representation is useful for interfacing with, and being indexed by, more domain-agnostic tools. This is especially true if your RDF has types that overlap with schemas from organisations like schema.org, or can readily be translated into the Wikidata format. WikiPathways is a good example of a biological sciences project making use of Wikidata.

Founded by a number of large internet search providers, schema.org provides a general-purpose RDF schema defining a set of types of entities and the relationships between them. There are also community extensions to the base schema.org schemas, such as bioschemas.org.

Conforming to an existing schema, or an extension of one, is a good way to enforce compliance of a metadata description with a standard model of that class of data, because automated tools can check whether a representation conforms to a schema. The Shapes Constraint Language (SHACL) is a more recent and rigorous language for describing the constraints on the properties of an RDF representation. Such constraints are useful when you are building tooling around metadata that, for reasons such as performance and ease of use, has to make more assumptions about its structure than merely that it is RDF.
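The following Python sketch illustrates the idea behind shape-based validation without using SHACL itself: each hypothetical `PropertyShape` records a property path, a minimum count, and an expected type, loosely mirroring SHACL’s `sh:path`, `sh:minCount`, and `sh:datatype`. The property names and the record are invented for illustration; a real project would express the shape in SHACL and check it with an existing SHACL validator.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PropertyShape:
    """Hypothetical, simplified stand-in for a SHACL property shape."""
    path: str             # cf. sh:path
    min_count: int = 0    # cf. sh:minCount
    datatype: type = str  # cf. sh:datatype


# Illustrative shape for a dataset description; property names are assumptions.
DATASET_SHAPE = [
    PropertyShape("name", min_count=1),
    PropertyShape("identifier", min_count=1),
    PropertyShape("license", min_count=1),
    PropertyShape("keywords"),
    PropertyShape("sampleCount", datatype=int),
]


def validate(record, shape):
    """Return a list of human-readable violations (empty if the record conforms)."""
    violations = []
    for prop in shape:
        values = record.get(prop.path, [])
        if not isinstance(values, list):
            values = [values]  # treat a single value as a one-element list
        if len(values) < prop.min_count:
            violations.append(
                f"{prop.path}: expected at least {prop.min_count} value(s), found {len(values)}")
        for value in values:
            if not isinstance(value, prop.datatype):
                violations.append(
                    f"{prop.path}: {value!r} is not of type {prop.datatype.__name__}")
    return violations


record = {
    "name": "Example RNA-seq dataset",
    "identifier": "hypothetical-id-001",
    "keywords": ["RNA-seq", "mouse"],
    "sampleCount": "12",  # a string where an integer is expected
}
for problem in validate(record, DATASET_SHAPE):
    print(problem)
# license: expected at least 1 value(s), found 0
# sampleCount: '12' is not of type int
```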

It is often possible, or necessary, to translate from a niche domain-specific representation, often containing more detail than a more general metadata model can represent, into that more general model. This generally means using the overlapping subset of features present in both models, plus some conceptual mapping that relates sufficiently similar categories in each.
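A minimal Python sketch of such a translation, assuming a hypothetical lab-internal record and a hand-written field mapping onto a few schema.org `Dataset` properties (`name`, `description`, `keywords`, `license`). Fields without a counterpart are returned separately rather than silently dropped, which makes the loss of detail explicit; in practice they might go into a supplementary file alongside the general-purpose record.

```python
# Hypothetical lab-internal field names mapped onto schema.org Dataset properties.
FIELD_MAP = {
    "title": "name",
    "summary": "description",
    "tags": "keywords",
    "licence_url": "license",
}


def to_general_model(internal):
    """Translate the overlapping subset of fields; return (mapped, unmapped)."""
    mapped = {"@context": "https://schema.org", "@type": "Dataset"}
    unmapped = {}
    for key, value in internal.items():
        if key in FIELD_MAP:
            mapped[FIELD_MAP[key]] = value
        else:
            unmapped[key] = value  # detail the general model has no slot for
    return mapped, unmapped


internal_record = {
    "title": "Mutant X vs control (hypothetical example)",
    "summary": "PolyA RNA-seq, 6 control and 6 homozygous mutant samples.",
    "tags": ["RNA-seq", "mouse"],
    "licence_url": "https://creativecommons.org/licenses/by/4.0/",
    "freezer_location": "Freezer 3, shelf B",
}
mapped, unmapped = to_general_model(internal_record)
print(sorted(mapped))  # ['@context', '@type', 'description', 'keywords', 'license', 'name']
print(unmapped)        # {'freezer_location': 'Freezer 3, shelf B'}
```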

For representing metadata, JSON-LD is the newer format preferred by many developers for linked data, as it is very human readable and relatively easy to work with ‘by hand’ compared with XML-based serializations such as RDF/XML, or RDFa, which embeds linked data in the attributes of HTML or XML markup.

Including a description of your data in JSON-LD, conforming to schema.org, in the header of an HTML document allows that page to be indexed and mined more effectively by major search engines, aiding the discoverability of pages marked up in this way.
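As a sketch of what such mark-up can look like, the Python below builds a minimal schema.org `Dataset` description and wraps it in a `<script type="application/ld+json">` element for an HTML `<head>`. The property names (`name`, `description`, `keywords`, `license`, `creator`, `identifier`) are schema.org terms, but all the values, including the ORCID iD, are placeholders, and which properties a given search engine actually uses is a matter for that search engine’s own documentation.

```python
import json


def jsonld_script(metadata):
    """Serialise metadata as JSON-LD inside a script element for an HTML <head>."""
    payload = json.dumps(metadata, indent=2)
    # Escape "</" so a value can never close the <script> element early;
    # "<\/" is still valid JSON and decodes back to "</".
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


dataset = {
    "@context": "https://schema.org",
    "@type": "Dataset",
    "name": "Example RNA-seq dataset (placeholder)",
    "description": "PolyA RNA-seq of 6 control and 6 homozygous mutant mice.",
    "keywords": ["RNA-seq", "Mus musculus"],
    "license": "https://creativecommons.org/licenses/by/4.0/",
    "creator": {
        "@type": "Person",
        "name": "A. Researcher",
        "identifier": "https://orcid.org/0000-0000-0000-0000",  # placeholder ORCID iD
    },
}

print(jsonld_script(dataset))
```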

For more, see the linked data glossary.

Ontology - A formal description of the things which exist (classes), the relationships between them (properties), and the logical properties by which they can be combined (axioms).

Schema - A definition of the structure and contents of a data model; often a description of the constraints that would make a given representation of some data a valid instance of a specific data model, e.g. a file could be validated against a schema to check whether it is a valid instance of a model.

Serialization format - A file format for representing a conceptual model. RDF, for example, is an abstract conceptual model for representing data; RDFa, Turtle, and JSON-LD are textual conventions for representing the same semantic information.

Standard - An agreed set of norms, often accompanied by formal specifications to facilitate conforming to these norms.

Data model(ing) - Creating a representation of domain-specific knowledge that faithfully represents that knowledge, so that it can be formalized into some form of standard representation.

Controlled Vocabulary - A curated set of terms to describe units of information, often a standardized word or phrase and a unique identifier linked to a description and synonyms. Used in taxonomies & ontologies.

The importance of good metadata standards is scale independent, from the entire internet down to the intimate details of the structure of image file formats, which we will touch on when we discuss OME-NGFF (Next Generation File Formats). If the world of formally structured metadata is a bit much, you can start small by adding some simple key-value pairs to a table or set of entries accompanying your published data, like “Species (key): human (NCBI:txid9606) (value)” or

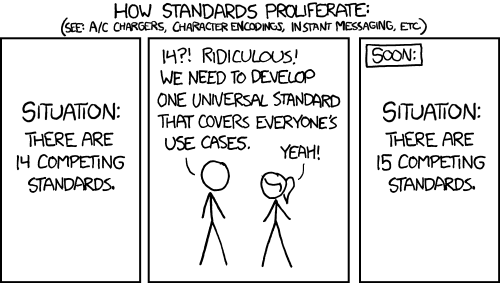
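For the simplest starting point, a Python sketch that writes such key-value pairs, with an ontology identifier alongside each human-readable value where one is known, to a tab-separated file that can travel with the data. The file name, the column names, and the keys are hypothetical choices; only the NCBI taxon identifier is real.

```python
import csv
import os
import tempfile

# Hypothetical key-value metadata; the third column holds an ontology ID where one was looked up.
METADATA = [
    ("Species", "human", "NCBI:txid9606"),
    ("Assay", "RNA-seq", ""),
    ("Samples", "12", ""),
]


def write_sidecar(path, rows):
    """Write key / value / ontology_id rows to a tab-separated sidecar file."""
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle, delimiter="\t")
        writer.writerow(["key", "value", "ontology_id"])
        writer.writerows(rows)


def read_sidecar(path):
    """Read the sidecar back into a {key: (value, ontology_id)} dictionary."""
    with open(path, newline="", encoding="utf-8") as handle:
        reader = csv.DictReader(handle, delimiter="\t")
        return {row["key"]: (row["value"], row["ontology_id"]) for row in reader}


path = os.path.join(tempfile.mkdtemp(), "metadata.tsv")
write_sidecar(path, METADATA)
print(read_sidecar(path)["Species"])  # ('human', 'NCBI:txid9606')
```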

Pragmatically, technical and theoretical considerations of what might be the best format for your metadata are often trumped by ease of use, good tooling, and wide adoption in a particular community. As a general rule, it is best to use the community standard and to participate in community efforts to reform, refactor, or extend it if it is lacking in some way. Beware the proliferation of standards problem:

1.2.2.1 The ISA (Investigation, Study, Assay) metadata model

How can we approach systematically organizing such a complex and heterogeneous collection of metadata? This is an extremely challenging problem without a definitive answer. There are a number of standards, none universal in scope. Systematic, machine-readable representation of this metadata is, however, essential to achieving the F in FAIR (Findable): data cannot be properly indexed & searched without structured metadata.

One schema proposed for the representation of biological experiments is the ISA model (Sansone et al. 2012 [cito:citesAsAuthority]):

- Investigation

- A high level concept linking together related studies

- Study

- Information on the subject under study, its characteristics, and the treatments applied

- Assay

- A test performed on material taken from the subject, which produces a qualitative or quantitative measurement

This provides a high-level framework for structuring your experimental metadata that is extensible, accommodating a variety of more specialized descriptions within it or referenced by it.
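One way to see the nesting is as plain Python data classes. This is a hypothetical, heavily simplified sketch: the real ISA model (and its ISA-Tab and ISA-JSON serializations) defines far more fields, and the attribute names below are illustrative rather than taken from the specification.

```python
from dataclasses import dataclass, field


@dataclass
class Assay:
    measurement: str  # what is measured, e.g. "transcription profiling"
    technology: str   # how it is measured, e.g. "RNA-seq"
    data_files: list[str] = field(default_factory=list)


@dataclass
class Study:
    title: str
    subject: str                   # e.g. an organism and strain
    factors: dict[str, list[str]]  # experimental factors and their levels
    assays: list[Assay] = field(default_factory=list)


@dataclass
class Investigation:
    title: str
    studies: list[Study] = field(default_factory=list)

    def all_data_files(self):
        """Walk Investigation -> Study -> Assay and collect every data file."""
        return [f for s in self.studies for a in s.assays for f in a.data_files]


inv = Investigation(
    title="Effect of mutant X (hypothetical example)",
    studies=[
        Study(
            title="2-month-old mice",
            subject="Mus musculus <strain>",
            factors={"genotype": ["control", "homozygous mutant X"]},
            assays=[Assay("transcription profiling", "RNA-seq",
                          ["ctrl_1.fastq.gz", "mut_1.fastq.gz"])],
        )
    ],
)
print(inv.all_data_files())  # ['ctrl_1.fastq.gz', 'mut_1.fastq.gz']
```

Because every data file hangs off an assay, which hangs off a study and an investigation, the experimental context (subject, factors, technology) can always be recovered by walking back up the tree.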

¹ A strategy for gauging how findable your data is: get a student in your lab, or a neighboring one, with a passing familiarity with your project to try to retrieve your data from the internet without using your name. Can they do it at all? How long did it take? What would have made it easier? How would you search for your dataset if you were trying to perform an analysis like yours on publicly available data?

² It’s useful to know that data exists even if it’s not publicly accessible, so that you can ask to see it; if you don’t even know it exists, you can’t ask.

³ Note that this published method for the preparation of phosphorus appeared a full 23 years after phosphorus was first produced by Hennig Brandt in 1669: history doesn’t repeat itself, but it sure does rhyme.

⁴ This text defaults to American English spellings, mostly because I was too lazy to change the default spell-checker settings.