5 When To Publish Data

There are varying norms in the academic community about when in the research cycle to publish your data. In the biological sciences today the most prevalent practice in my experience is data release at time of publication. At least for individual research projects. For large consortia producing sequencing data it is more common for data to be released shortly after it is generated as laid out in The Fort Lauderdale Principles which we will be covering and critiquing in section Section 5.1.

There are a number of good reasons to publish data (almost) as soon as it is generated instead of waiting until time of publication that we will cover below.

We will be addressing considerations of privacy & consent in Chapter 7 What Data to Publish.

5.1 The Fort Lauderdale Principles

The Fort Lauderdale principles arose from a meeting of ~40 people organised by the Wellcome trust to discuss pre-publication release of data in the field of genomics. These principles have become the basis for many data release policies in consortia in the biological sciences since. The Fort Lauderdale Report, a 4 page summary of the conclusions of this meeting, was produced approximately 20 years ago at time of writing in January of 2003.

The report lays out the responsibilities of 3 groups: Funders, Producers & Users of data.

Here is a summary of these responsibilities:

- Funders are tasked with ensuring that:

- Project descriptions are published

- Requiring that data be publicly released as a condition of funding

- Encouraging participation in community resource projects

- Providing a centralized index of community resource projects

- Providing centralized repositories for the data

- Resource producers with:

- Publishing a project description

- Producing data of consistently high quality

- Making the data immediately available without restrictions

- Recognize that there may be violations of the norm of not publishing ‘global’ analyses prior to those generated by the data generators

- Resource users with:

- Citing the project description

- Not publishing ‘global’ analyses of the data before its producers do

- Ensuring that these norms are adhered to by the community

These guidelines contain mostly very sound recommendations and have largely served the community very well, indeed data sharing in genomics is ahead of many other domains, imaging for example is only now beginning to catch up. There are, however, some distinctly problematic aspects of these recommendations, and there is much room to continue to improve our best practices.

Specifically the section on the recommendations to ‘resource users’ contains language which could not too uncharitably be interpreted as advising ‘resource users’ to punish those who violate the norm of allowing ‘resource produces’ to be the first to publish ‘global’ analyses of the datasets that they generated. Implying means such as holding up review of publications and/or grants to do so. This is of course totally unacceptable.

One of the issues with this approach to the enforcement of these norms is that more senior researchers can more readily enforce them on Junior researchers than vice versa. Senior researchers are more likely to sit on grant awarding bodies and be requested as reviewers on papers giving them more access to these tools. Junior researchers are hesitant to release data prior to publication for fear that larger and more well resourced groups may be able to analyse their data and shepherd a manuscript through the publication pipeline before they are able to do so. This potentially reduces the ‘novelty’ of their findings as prior work has now been published with their datasets. This need not, and likely rarely is, malicious on the part of other researchers who have simply identified suitable datasets to use in their analyses. Even if in reality this is an infrequent occurrence, concern over the possibility suppresses researchers willingness to share data.

Since the Fort Lauderdale report was authored changes in the technology have meant that many more researchers are producing datasets at a scale which could reasonably be considered a ‘resource’. Whole international consortia in 2003 may have produced less data than some individual PhD projects in 2023. We are almost all ‘resource producers’ now, thus to preserve the practice of pre-publication release of data resources, and the benefits this has for the FAIRness of data and the pace of scientific investigations, we must fix the incentives issues around individual pre-publication data sharing.

I would suggest that 20 years on these principles are in need of a bit of an update to reflect a more constructive attitude to the use of public data. The updated advice that I would recommend is to follow a different workflow which preserves the publication precedence of the ‘resource producers’ without restricting general access to data shortly after its generation. This approach is called registered reports, and they address more problems than just being hesitant to release your data prior to publication for fear of being ‘scooped’1.

5.2 Registered Reports

The centre for open science (COS) describes Registered Reports as “Peer review before results are known to align scientific values and practices”. There are over 300 participating journals where this format is accepted (including for example Nature (“Nature Welcomes Registered Reports” 2023 [cito:citesAsAuthority]), Nature Communications, PLOS Biology & BMC Biology). If a Journal that you would like to publish with does not yet support registered reports I would suggest writing to an editor there and requesting that they consider doing so, maybe get together with some colleagues and write a joint letter.

The workflow for a registered report differs somewhat from the conventional publication process. You effectively write a version of your introduction and methods sections prior to performing your main experimental work, submit this for review and secure an agreement in principle to publish your results irrespective of the outcome of your experiment.

To be clear this does not limit exploratory analysis just makes clear what is planned and what is post-hoc. This Prevents HARKing (Hypothesizing After the Results are Know)

One of the advantages to in-principle acceptance at stage 1 review is that it allows researchers to list publications much sooner than they could for a conventional manuscript, especially useful for early career researchers in a context where conventional publication can drag out over years.

For a computational analysis the study design phase would ideally include writing your analysis code using mock or example data and submitting running code as a part of the review, as was discussed in section Chapter 2 when to generate data. Once your data has been generated you are then ready to perform your planned analysis almost immediately using reviewed and tested code. Data would ideally be placed into a public repository before you run your main analysis prior to the 2nd review stage. Your data would also ideally be accessed from the repository by your analysis pipeline using it’s globally unique identifier(s). This serves to test the accessible and interoperable components of the FAIRness of your dataset. You don’t know if data is FAIR until you actually try and (re-)use it, if it’s first use follows the same pattern as anyone who might subsequently want to re-use it we have a clear demonstration of how the data can be re-used.

“In a world where research can now circulate rapidly on the Internet, we need to develop new ways to do science in public.”

Protocols you plan to use in your analysis perhaps developed and refined whilst generating some preliminary data can be shared on a protocol sharing platform such as protocols.io or via a tool like the OSF. These can be cited in your registered report, and because such platforms permit versioning if any further refinements are made in the course of the main experiment, can be updated and the new version cited in the final manuscript permitting people to see the revisions.

Whilst not applicable in every context the approach taken by registered reports needn’t apply only to the more narrowly hypothesis driven work, they can apply in contexts where more exploratory questions are being asked. For example if you are carrying out a screen like question looking for genes or pathways that differ between some experimental conditions it is still possible to lay out in advance your analysis plan and criteria for deeming a change of sufficient magnitude or significance. Though the acceptability of this approach may vary depending on the criteria for registration at specific publication venues.

This workflow puts thinking about the analysis and experimental design strictly before data collection. This helps to address common issues faced by data analysts with being consulted too late in the process to address issues in the experimental design, about which I opined in Chapter 2 When To Generate Data.

If you retroactively discover unanticipated problems with your design and think that different analytic methods than you planned may yield results that more accurately reflect the phenonomenon that you are working on, then present these alongside your planned analyses along with your reasoning about the improvements. Your entire analysis need not be constrained by you pre-registered plan. The plan merely servers as an accountability mechanism and as an exercise to ensure that you have thought deeply enough about how you are going to analyse your results before you embark on generating them. This workflow provides a much more robust mechanism against unintentionally preforming experiments that would be obviously bad, unnecessarily expensive and/or wasteful with the benefit of hindsight, something that occurs more often than we’d probably like to admit.

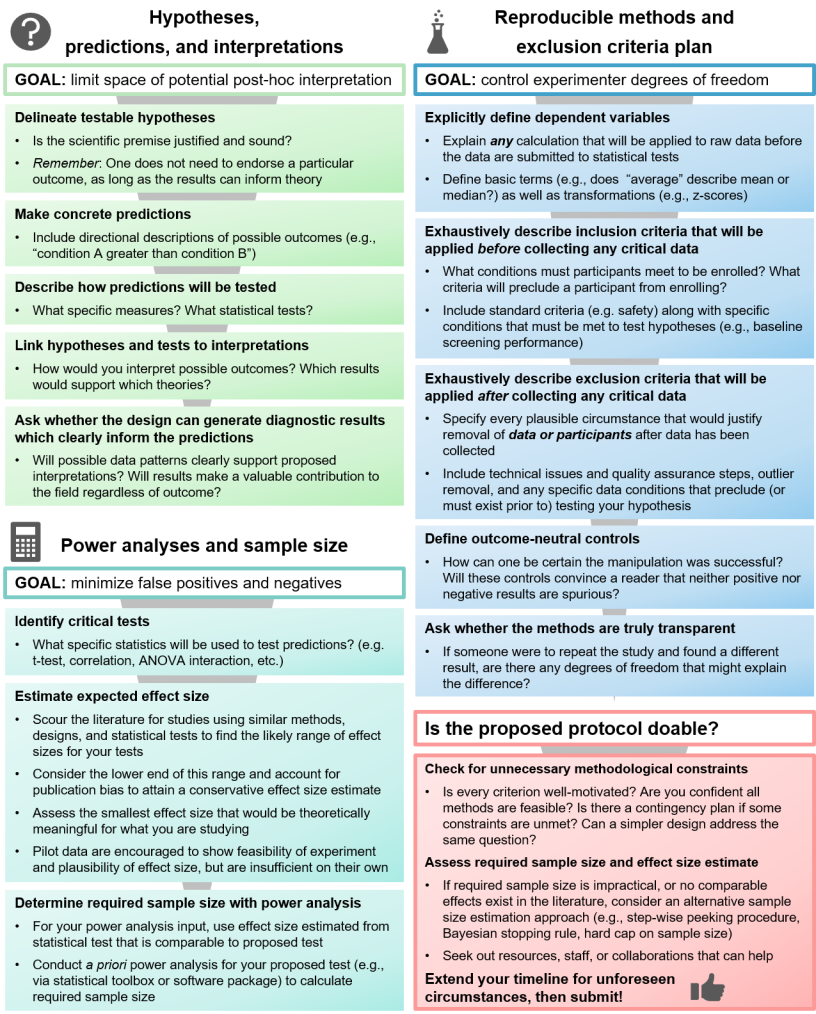

If you are considering doing a registered report I suggest that you refer to the section on the center for open science’s website and this practical guide to navigating registered reports from Anastasia Kiyonaga, Jason M. Scimeca that is summarized in the figure below.

5.3 Counterpoints to publication on data generation

5.3.1 Poor Quality data

A point against publishing data immediately on generation is potential quality issues and I would recommend performing basic quality control (QC) analyses before publishing a dataset along with your QC analysis. If your dataset fails QC so badly it’s a total write-off then you shouldn’t bother to publish but marginal samples should generally be included even if you exclude them from your analysis with the QC info that you used to make this call. Alternative methods may make data that fails QC for you still useful for someone else’s question.

Publishing QC information can be valuable data for anyone trying to perform meta-scientific analyses that might inform decision making of future researchers. for example if a particular instrument or preparation technique has a high rate of QC failure this is a consideration that could be important in picking sample numbers in a future experiment.

‘Scooped’ is a term we should excise from the academic lexicon, we are scientists not journalists we are ‘corroborated’ not ‘scooped’.↩︎